It was July of 2016, and by our rough calculations we had about twelve months to develop a reliable robot for the competition the following year in Nagoya, Japan. We briefly discussed building the robot from a new material, CPE, which boasted a high degree of resistance to heat and chemicals. The competition itself posed little risk of our robot being subjected to high temperatures or doused in acid. Still, we thought heat and chemical resistance would be a good selling point to potential investors looking to use our robot for remote reconnaissance in the field.

Other topics included (rather ludicrously, we would eventually discover) a system for quickly and easily attaching flexible belts to the wheels, as well as our own printed circuit board for all the other devices to connect to. Less ludicrous, and slightly more achievable, were an upgrade to the internal computer (we briefly considered a Raspberry Pi 3, which had been released six months earlier) and the integration of mapping-capable sensors (whether laser or sonar, we didn't yet know).

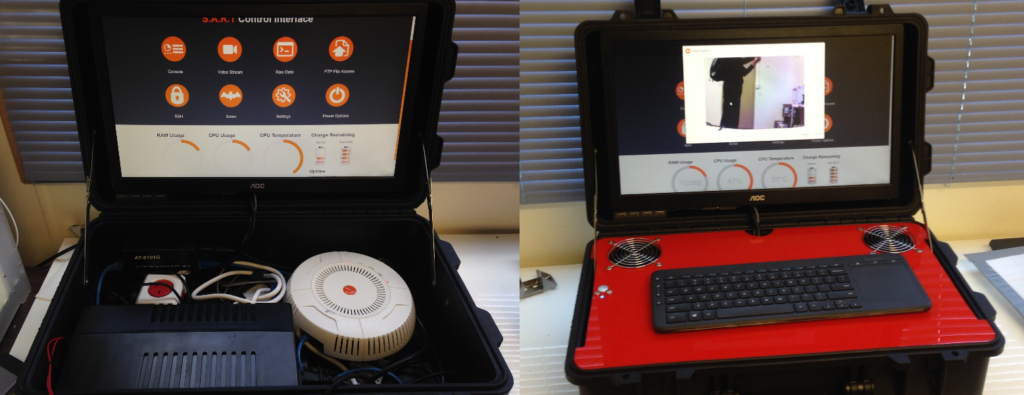

As a pseudo-feasibility study, we toyed with the idea of a dedicated control panel encased in its own carrying case. We theorised that it could contain a small computer, a display and a Wi-Fi access point, giving us a dedicated, high-powered point-to-point link between the robot and the control panel.

It was at this point that Graham Stock joined the team. Well, I say joined, but what I really mean is that he created a management position for himself. Graham was the STEM coordinator and physics teacher at SFX, and he showed great interest in mentoring us through this project.

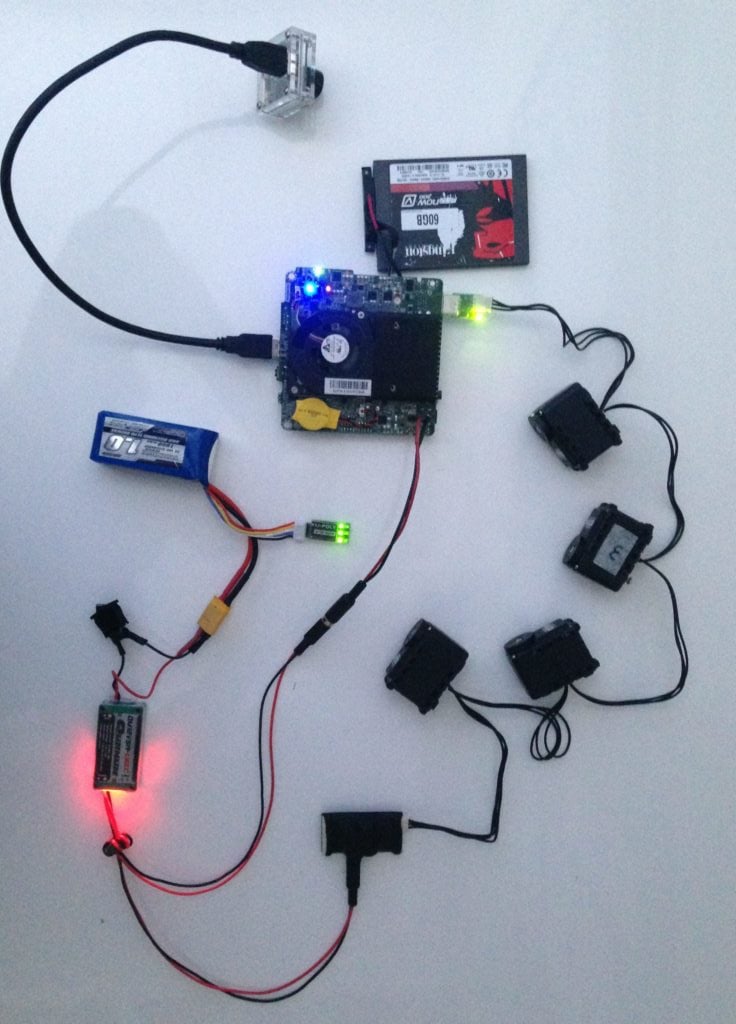

Gerard proposed putting an Intel NUC in the robot instead of a Raspberry Pi. This brought a number of benefits, chiefly the versatility that comes with greater processing power, though at the expense of extra mass, cost and power consumption. Naturally, we all thought this was a brilliant idea, and not only because Gerry had suggested it. Given the size of the battery we were going to fit in the robot, I used Intel's datasheet for that NUC model to work out roughly that we'd be able to power the NUC for about an hour.
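
That estimate is simple arithmetic: usable battery energy divided by average power draw. Here is a minimal sketch of it in Python. The figures in it are made up for illustration (a hypothetical 5000 mAh 3S LiPo at 11.1 V nominal and an assumed 40 W average draw), not our actual battery or the values from Intel's datasheet, and the 0.8 derating factor is likewise an assumption.

```python
def battery_energy_wh(capacity_mah: float, nominal_voltage_v: float) -> float:
    """Convert a pack rating in mAh at a nominal voltage to watt-hours."""
    return capacity_mah / 1000.0 * nominal_voltage_v


def estimate_runtime_hours(energy_wh: float, load_w: float, usable_fraction: float = 0.8) -> float:
    """Rough runtime: usable energy divided by average load.

    usable_fraction is an assumed derating for conversion losses and for not
    draining the pack completely; it is not taken from any datasheet.
    """
    if load_w <= 0:
        raise ValueError("load_w must be positive")
    if not 0 < usable_fraction <= 1:
        raise ValueError("usable_fraction must be in (0, 1]")
    return energy_wh * usable_fraction / load_w


if __name__ == "__main__":
    # Hypothetical numbers for illustration only.
    energy = battery_energy_wh(5000, 11.1)           # about 55.5 Wh
    hours = estimate_runtime_hours(energy, load_w=40.0)
    print(f"{energy:.1f} Wh -> about {hours:.2f} h")
```

With these placeholder numbers it prints roughly 1.11 hours, the same ballpark as our back-of-the-envelope figure. The real answer depends heavily on how hard the NUC is actually worked.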

With this increased mass (from the NUC and battery), we would need more powerful servos. Up until this point we had been using Dynamixel AX12As, which had plastic gearing and, for our purposes, insufficient speed and torque. I had thought the AX18As we were upgrading to had metal gears; however, it turned out that it was the next model up that introduced that feature, for a hefty increase in price. So we settled for the upgrade in speed and power and simply hoped the internal gears wouldn't chew themselves to pieces over the next few months.

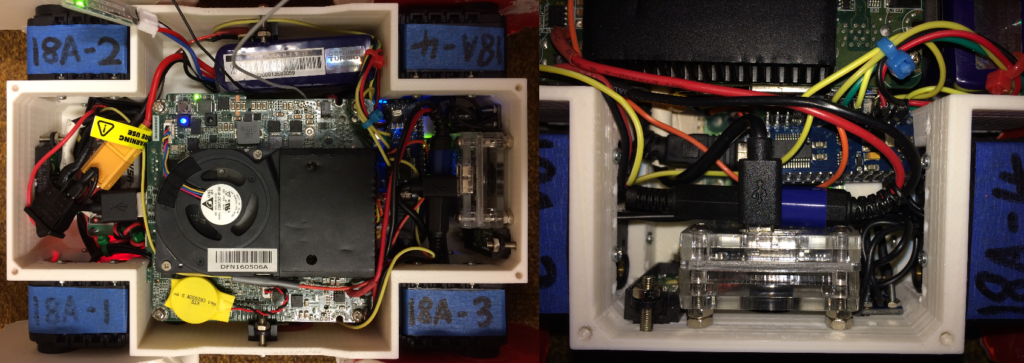

Jack directed the development of the control panel, which we had initially envisioned as a James Bond-style drone station with joystick controls, an integrated computer, a keyboard and mouse, a screen, a Wi-Fi access point and a battery…

Yes. Yes, I know. We made a laptop.

It was not just any laptop, though. It weighed over 15 kilograms and boasted an enterprise-level access point, a giant honking 1000 VA UPS and an anaemic BreezeLite Mini PC. We had originally intended to use the BreezeLite in the robot, but its locked-down BIOS meant we couldn't reliably install anything other than Windows, and we needed Ubuntu. But, as is often the way with cutting-edge technology development, one hits little snags, and this was but a small setback. Graham, in his infinite capacity for reason and common sense and gravity-generating genius, suggested we simply put the BreezeLite Mini PC in the control panel and the NUC in the robot.

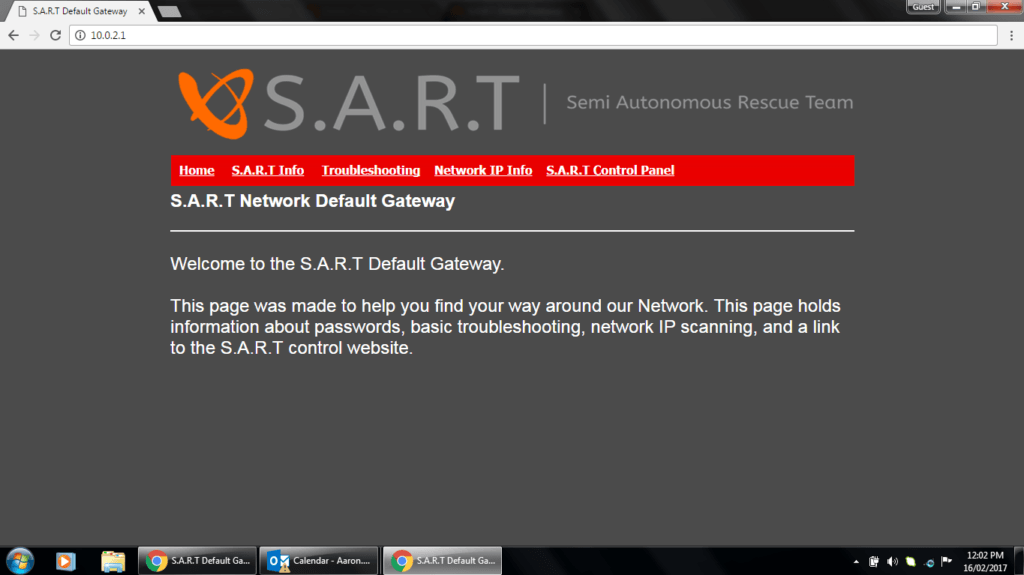

Jack had been working on the control panel interface, integrating a camera stream, an SSH console, a system monitor with battery levels, and motion controls into a single web interface. Meanwhile, Ryan worked on the robot's remote control and communication using Python and WebSockets, and Aaron busied himself testing and implementing the best network configuration for low latency and high throughput, even writing his own default gateway landing page.
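
To give a flavour of what that remote-control layer has to do, here is a small, hypothetical sketch (not Ryan's actual code). It decodes a JSON drive message of the kind a web interface might send over a WebSocket and turns normalised left and right speeds into values for the AX-series servos' wheel-mode "moving speed" register. There, 0 to 1023 drives one direction and 1024 to 2047 (bit 10 set) drives the other. The message format and function names are assumptions for illustration, and the WebSocket server itself is left out so the example runs with only the standard library.

```python
import json
from dataclasses import dataclass


@dataclass
class WheelCommand:
    left: int   # AX-series wheel-mode moving-speed value, 0-2047
    right: int


def to_ax_wheel_speed(speed: float) -> int:
    """Map a normalised speed in [-1, 1] to an AX wheel-mode speed value.

    Magnitude goes in bits 0-9 (0-1023); bit 10 (value 1024) flips direction.
    """
    speed = max(-1.0, min(1.0, speed))      # clamp out-of-range input
    magnitude = round(abs(speed) * 1023)
    return magnitude | 1024 if speed < 0 else magnitude


def parse_drive_message(raw: str) -> WheelCommand:
    """Decode a hypothetical message such as
    {"type": "drive", "left": 0.5, "right": -0.5}."""
    msg = json.loads(raw)
    if msg.get("type") != "drive":
        raise ValueError(f"unsupported message type: {msg.get('type')!r}")
    return WheelCommand(
        left=to_ax_wheel_speed(float(msg["left"])),
        right=to_ax_wheel_speed(float(msg["right"])),
    )


if __name__ == "__main__":
    print(parse_drive_message('{"type": "drive", "left": 0.5, "right": -0.5}'))
```

In a real robot, a function like `parse_drive_message` would be called from the WebSocket server's message handler. Because the servos on one side of a differential-drive chassis are usually mounted mirror-image, one side's direction would typically need inverting as well.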

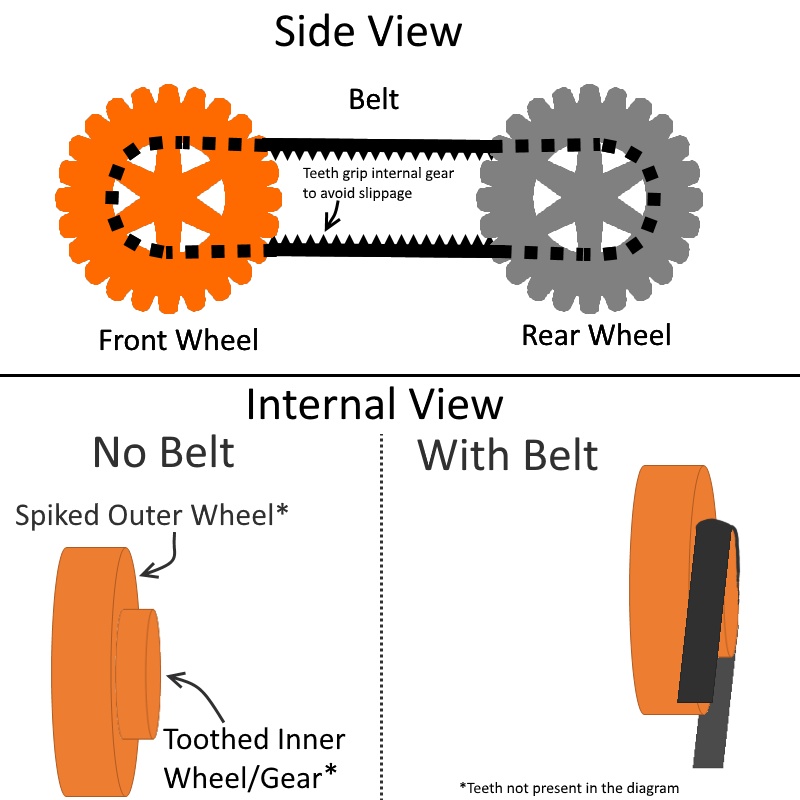

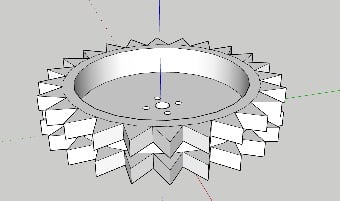

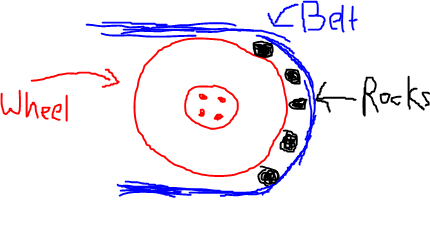

With the robot chassis mostly complete, I found myself playing around with different wheel designs. Riley, having returned from Germany, suggested that a belt transferring power between the wheels would be beneficial in some of the maze sections of the competition. Aaron, Jack and Ryan unanimously agreed, so I researched how much it would cost to have a pair of belts made, like those on a remote-control tank.

With my eyes bleeding at how expensive they were, I looked into alternatives and stumbled across a relatively new type of 3D printer filament. NinjaFlex, as it was rather aptly christened, was a rubbery filament that could be heavily deformed and would spring back into its original shape. We bought some immediately, and I threw together a section of belt track to test the physical properties and capabilities of this new wonder-material. I also modified the 3D models of the wheels we had been using up until this point to include a groove down the centre for a thin belt to run in.

After printing two belts (one for each side) and about five minutes of testing, we discovered that belts running over the wheels and wheel spikes would cause more problems than they solved. We quickly abandoned the idea.

As the competition loomed ever closer, it was time to put everything together for the final time. It took a huge effort to squeeze all the necessary components into the chassis, but with the help of a hammer, a Dremel and a toothpick, we managed it. Now, because we were 17 and high on dopamine now that the project we'd been working on for so long had finally come to fruition, Riley suggested we film our own version of Release Day by RocketJump to celebrate the end of this development phase. Sadly, nothing came of it (and hopefully nothing ever will; I don't think I could survive the cringe), but we still have the raw footage… somewhere.

We then haphazardly threw everything we thought we might need for upgrades or emergency repairs during the competition into a case, sealed the completed robot into its specialised carrying case and set off into the sunset, unaware of what was to come.

Fortunately, you do not have to wait to find out what was to come.

You can read Jack’s competition recap blogs, or watch the vlog of our experiences in Japan.